Nvidia today announced that its new Ampere-based data center GPUs, the A100 Tensor Core GPUs, are now available in alpha on Google Cloud. As the name implies, these GPUs were designed for AI workloads, as well as data analytics and high-performance computing solutions.

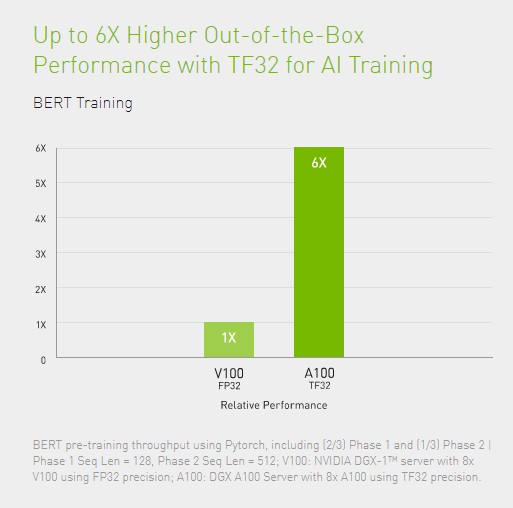

The A100 promises a significant performance improvement over previous generations. Nvidia says the A100 can boost training and inference performance by over 20x compared to its predecessors (though most benchmarks show improvements closer to 6x or 7x). It tops out at about 19.5 TFLOPS in single-precision performance and 156 TFLOPS for Tensor Float 32 workloads.

“Google Cloud customers often look to us to provide the latest hardware and software services to help them drive innovation on AI and scientific computing workloads,” said Manish Sainani, Director of Product Management at Google Cloud, in today’s announcement. “With our new A2 VM family, we are proud to be the first major cloud provider to market NVIDIA A100 GPUs, just as we were with NVIDIA’s T4 GPUs. We are excited to see what our customers will do with these new capabilities.”

Google Cloud users can get access to instances with up to 16 of these A100 GPUs, for a total of 640GB of GPU memory and 1.3TB of system memory.
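
For readers who want to sanity-check the quoted figures, here is a minimal back-of-the-envelope sketch. It uses only numbers from this article; the function names are hypothetical and chosen for illustration, and the per-GPU memory is derived from the quoted totals rather than taken from a spec sheet.

```python
# Figures quoted in the article.
GPUS_PER_INSTANCE = 16        # maximum A100 GPUs per instance
TOTAL_GPU_MEMORY_GB = 640     # total GPU memory at that maximum
FP32_TFLOPS = 19.5            # quoted single-precision peak
TF32_TFLOPS = 156.0           # quoted Tensor Float 32 peak


def per_gpu_memory_gb(total_gb: float, gpu_count: int) -> float:
    """Memory per GPU implied by an instance's total GPU memory."""
    return total_gb / gpu_count


def peak_ratio(new_tflops: float, old_tflops: float) -> float:
    """Ratio between two peak-throughput figures."""
    return new_tflops / old_tflops


if __name__ == "__main__":
    memory = per_gpu_memory_gb(TOTAL_GPU_MEMORY_GB, GPUS_PER_INSTANCE)
    ratio = peak_ratio(TF32_TFLOPS, FP32_TFLOPS)
    print(f"Implied memory per GPU: {memory} GB")  # 40.0 GB
    print(f"TF32 peak vs. FP32 peak: {ratio}x")    # 8.0x
```

These are ratios of peak numbers only, so they say nothing about the real-world speedups discussed above.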