Following years of backlash over its algorithms and their ability to push people to more extreme content, a charge Facebook continues to deny, the company today announced it would give its users new tools to more easily switch over to non-algorithmic views of their News Feed. These include the recently launched “Favorites,” which shows you posts from up to 30 of your favorite friends and Pages, as well as the “Most Recent” view, which shows posts in chronological order. It also introduced new controls for adjusting who can comment on your posts, and other changes.

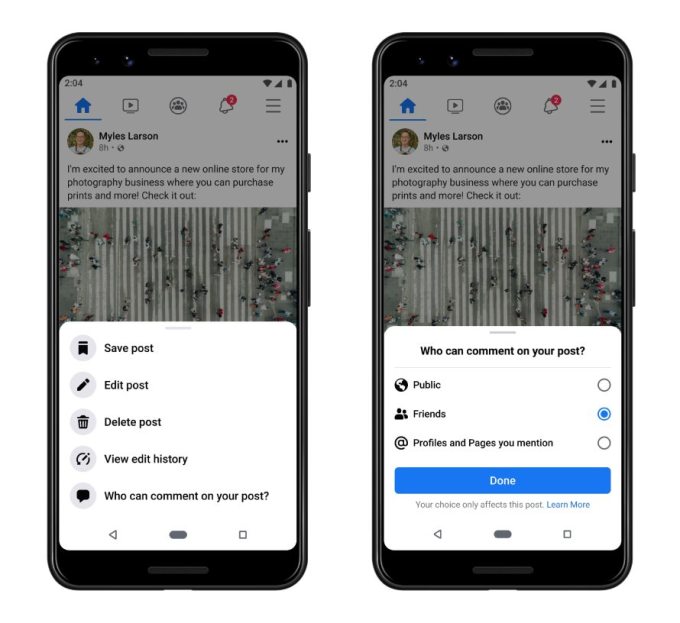

In some cases, the features themselves aren’t entirely new, but they’ve been made easier to reach with the addition of a Feed Filter Bar on mobile for changing the view of the News Feed, and an option menu on your posts to control who can comment.

The “Most Recent” view of the News Feed has long existed but has been buried in the extended “more” menu (the three-bar hamburger icon) on the Facebook mobile app. It’s not as useful as it sounds because it shows you all the posts from both friends and Pages in a single chronological view. If you’ve been on Facebook for many years, then you’ve probably “Liked” a number of Facebook Pages for brands, businesses and public figures. These Pages tend to post with more frequency than your friends, so the feed has become largely a long scroll through Page updates.

However, if you still prefer the “Most Recent” view, the Feed Filter Bar will give you a tool to more easily switch back and forth between this and other views. The feature will launch on Android first, then roll out to iOS.

Meanwhile, Facebook has offered a way to prioritize who you see in your News Feed through a “See First” setting, but the newer “Favorites” feature rebrands this effort and gives you a single destination under Settings to select and deselect your Favorites, including favorite Pages.

The updated commenting controls are a new take on a habit many Facebook users have already adopted: sharing a post only with a given audience, like family or friends, while excluding other groups like work colleagues or even specific people. Now, users will have the option to instead share their posts more widely but control who can engage in conversations. Public figures, for example, may choose to adopt the feature to restrict commenting to only those brands and profiles they’ve tagged.

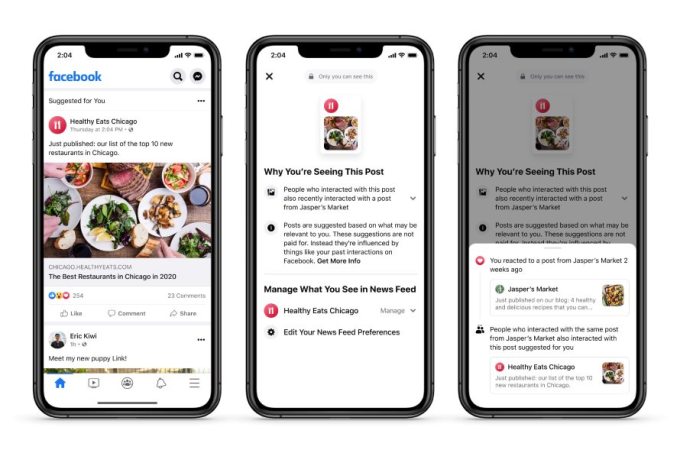

Facebook says it will also show more context around suggestions it displays in the News Feed with its “Why am I seeing this?” feature that will explain how its algorithmic suggestions work. It says several factors may be at work here, in terms of what’s shown and why — including your location, whether you or people like you have engaged with related topics, groups or Pages, and more.

The changes arrive at a time when Facebook, along with other tech giants, has come under fire for its role in spreading misinformation leading to deadly events, like the storming of the U.S. Capitol, and serious public health crises, like vaccine hesitancy during a pandemic. Facebook CEO Mark Zuckerberg last week testified before the House’s Subcommittee on Communications and Technology about the company’s failures to remove dangerous misinformation and its role in allowing extremists to become more radicalized and to organize online.

Facebook’s official position, however, is that it doesn’t play a role in directing people towards problematic content; they seek it out. In that view, people’s News Feeds are only a reflection of their own choices.

These thoughts and more were detailed today by Nick Clegg, VP of Global Affairs for Facebook, who insists personalization algorithms are common across tech companies; Amazon and Netflix use them, too, for instance. Ranking, he argues, simply makes what’s most relevant to the user appear first, a framing that effectively blames users for the problems here. He also throws the decisions about Facebook’s role in peddling misinformation back to lawmakers, adding: “It would clearly be better if these [content] decisions were made according to frameworks agreed by democratically accountable lawmakers.”