The scale of supercomputing has grown almost too large to comprehend, with millions of compute units performing calculations at rates requiring, for first time, the exa prefix — denoting quadrillions per second. How was this accomplished? With careful planning… and a lot of wires, say two people close to the project.

Having noted the news that Intel and Argonne National Lab were planning to take the wrapper off a new exascale computer called Aurora (one of several being built in the U.S.) earlier this year, I recently got a chance to talk with Trish Damkroger, head of Intel’s Extreme Computing Organization, and Rick Stevens, Argonne’s associate lab director for computing, environment and life sciences.

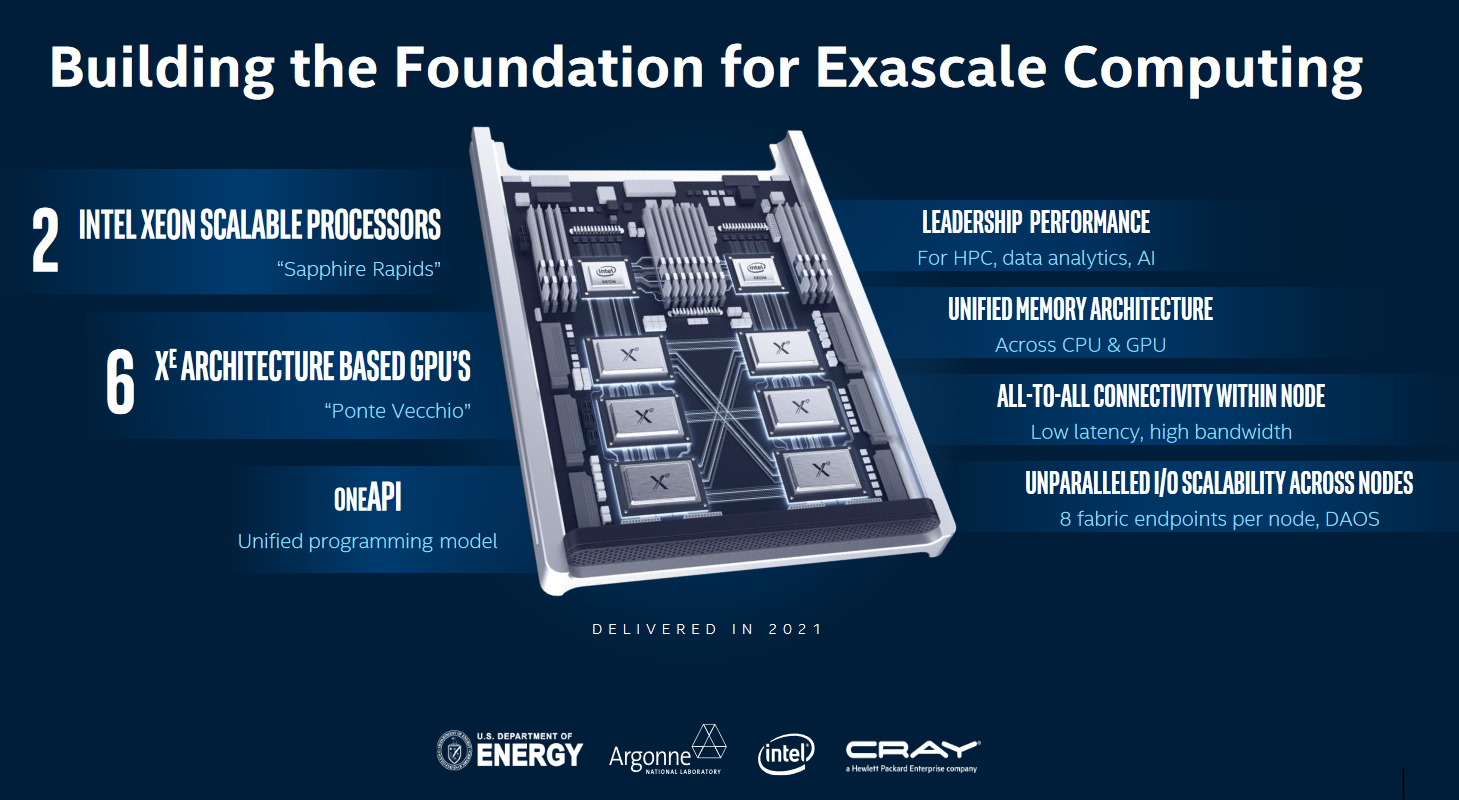

The two discussed the technical details of the system at the Supercomputing conference in Denver, where, probably, most of the people who can truly say they understand this type of work already were. So while you can read at industry journals and the press release about the nuts and bolts of the system, including Intel’s new Xe architecture and Ponte Vecchio general-purpose compute chip, I tried to get a little more of the big picture from the two.

It should surprise no one that this is a project long in the making — but you might not guess exactly how long: more than a decade. Part of the challenge, then, was to establish computing hardware that was leagues beyond what was possible at the time.

“Exascale was first being started in 2007. At that time we hadn’t even hit the petascale target yet, so we were planning like three to four magnitudes out,” said Stevens. “At that time, if we had exascale, it would have required a gigawatt of power, which is obviously not realistic. So a big part of reaching exascale has been reducing power draw.”

Intel’s supercomputing-focused Xe architecture is based on a 7-nanometer process, pushing the very edge of Newtonian physics — much smaller and quantum effects start coming into play. But the smaller the gates, the less power they take, and microscopic savings add up quickly when you’re talking billions and trillions of them.

But that merely exposes another problem: If you increase the power of a processor by 1000x, you run into a memory bottleneck. The system may be able to think fast, but if it can’t access and store data equally fast, there’s no point.

“By having exascale-level computing, but not exabyte-level bandwidth, you end up with a very lopsided system,” said Stevens.

And once you clear both those obstacles, you run into a third: what’s called concurrency. High performance computing is equally about synchronizing a task between huge numbers of computing units as it is about making those units as powerful as possible. The machine operates as a whole, and as such every part must communicate with every other part — which becomes something of a problem as you scale up.

“These systems have many thousands of nodes, and the nodes have hundreds of cores, and the cores have thousands of computation units, so there’s like, billion-way concurrency,” Stevens explained. “Dealing with that is the core of the architecture.”

How they did it, I, being utterly unfamiliar with the vagaries of high performance computing architecture design, would not even attempt to explain. But they seem to have done it, as these exascale systems are coming online. The solution, I’ll only venture to say, is essentially a major advance on the networking side. The level of sustained bandwidth between all these nodes and units is staggering.

Making exascale accessible

While even in 2007 you could predict that we’d eventually reach such low-power processes and improved memory bandwidth, other trends would have been nearly impossible to predict — for example, the exploding demand for AI and machine learning. Back then it wasn’t even a consideration, and now it would be folly to create any kind of high performance computing system that wasn’t at least partially optimized for machine learning problems.

“By 2023 we expect AI workloads to be a third of the overall HPC server market,” said Damkroger. “This AI-HPC convergence is bringing those two workloads together to solve problems faster and provide greater insight.”

To that end the architecture of the Aurora system is built to be flexible while retaining the ability to accelerate certain common operations, for instance the type of matrix calculations that make up a great deal of certain machine learning tasks.

“But it’s not just about performance, it has to be about programmability,” she continued. “One of the big challenges of an exacale machine is being able to write software to use that machine. oneAPI is going to be a unified programming model — it’s based on an open standard of Open Parallel C++, and that’s key for promoting use in the community.”

Summit, as of this writing the most powerful single computing system in the world, is very dissimilar to many of the systems developers are used working on. If the creators of a new supercomputer want it to have broad appeal, they need to bring it as close to being like a “normal” computer to operate as possible.

“It’s something of a challenge to bring x86-based packages to Summit,” Stevens noted. “The big advantage for us is that, because we have x86 nodes and Intel GPUs, this thing is basically going to run every piece of software that exists. It’ll run standard software, Linux software, literally millions of apps.”

I asked about the costs involved, since it’s something of a mystery with a system like this how that a half-billion dollar budget gets broken down. Really I just thought it would be interesting to know how much of it went to, say, RAM versus processing cores, or how many miles of wire they had to run. Though both Stevens and Damkroger declined to comment, the former did note that “the backlink bandwidth on this machine is many times the total of the entire internet, and that does cost something.” Make of that what you will.

Aurora, unlike its cousin El Capitan at Lawrence Livermore National Lab, will not be used for weapons development.

“Argonne is a science lab, and it’s open, not classified science,” said Stevens. “Our machine is a national user resource; We have people using it from all over the country. A large amount of time is allocated via a process that’s peer reviewed and priced to accommodate the most interesting projects. About two thirds is that, and the other third Department of Energy stuff, but still unclassified problems.”

Initial work will be in climate science, chemistry, and data science, with 15 teams between them signed up for major projects to be run on Aurora — details to be announced soon.