You’ll need to prick up your ears for this slice of deepfakery emerging from the wacky world of synthesized media: A digital version of Albert Einstein — with a synthesized voice that’s been (re)created using AI voice cloning technology drawing on audio recordings of the famous scientist’s actual voice.

The startup behind the “uncanny valley” audio deepfake of Einstein is Aflorithmic (whose seed round we covered back in February).

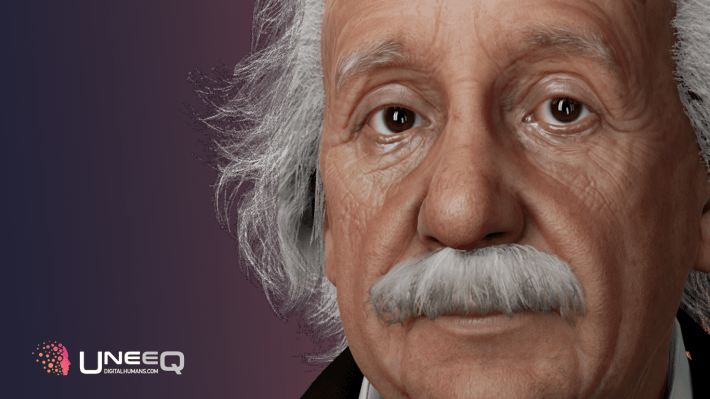

The video engine powering the 3D character rendering components of this “digital human” version of Einstein is the work of another synthesized media company, UneeQ, which is hosting the interactive chatbot version on its website.

Aflorithmic says the “digital Einstein” is intended as a showcase for what will soon be possible with conversational social commerce. Which is a fancy way of saying deepfakes that make like historical figures will probably be trying to sell you pizza soon enough, as industry watchers have presciently warned.

The startup also says it sees educational potential in bringing famous, long-deceased figures to interactive “life”.

Or, well, an artificial approximation of it: the “life” is purely virtual, and Digital Einstein’s voice is not a pure tech-powered clone either. Aflorithmic says it also worked with an actor to do voice modelling for the chatbot (because how else was it going to get Digital Einstein to say words the real deal would never even have dreamt of saying, like, er, “blockchain”?). So there’s a bit more than AI artifice going on here too.

“This is the next milestone in showcasing the technology to make conversational social commerce possible,” Aflorithmic’s COO Matt Lehmann told us. “There are still more than one flaws to iron out as well as tech challenges to overcome but overall we think this is a good way to show where this is moving to.”

In a blog post discussing how it recreated Einstein’s voice, the startup writes about progress it made on one challenging element of the chatbot version: it says it was able to shrink the response time, measured from receiving input text from the computational knowledge engine to its API rendering a voiced response, from an initial 12 seconds to less than three (which it dubs “near-real-time”). But that’s still enough of a lag to ensure the bot can’t escape being a bit tedious.

Laws that protect people’s data and/or image, meanwhile, present a legal and/or ethical challenge to creating such “digital clones” of living humans, at least without asking (and most likely paying) first.

Of course historical figures aren’t around to ask awkward questions about the ethics of their likeness being appropriated for selling stuff (if only the cloning technology itself, at this nascent stage). Though licensing rights may still apply — and do in fact in the case of Einstein.

“His rights lie with the Hebrew University of Jerusalem who is a partner in this project,” says Lehmann, before ’fessing up to the artistic licence element of the Einstein “voice cloning” performance. “In fact, we actually didn’t clone Einstein’s voice as such but found inspiration in original recordings as well as in movies. The voice actor who helped us modelling his voice is a huge admirer himself and his performance captivated the character Einstein very well, we thought.”

Turns out the truth about high-tech “lies” is itself a bit of a layer cake. But with deepfakes it’s not the sophistication of the technology that matters so much as the impact the content has — and that’s always going to depend upon context. And however well (or badly) the faking is done, how people respond to what they see and hear can shift the whole narrative — from a positive story (creative/educational synthesized media) to something deeply negative (alarming, misleading deepfakes).

Concern about the potential for deepfakes to become a tool for disinformation is rising, too, as the tech gets more sophisticated — helping to drive moves toward regulating AI in Europe, where the two main entities responsible for “Digital Einstein” are based.

Earlier this week a leaked draft of an incoming legislative proposal on pan-EU rules for “high risk” applications of artificial intelligence included some sections specifically targeted at deepfakes.

Under the plan, lawmakers look set to propose “harmonised transparency rules” for AI systems that are designed to interact with humans and those used to generate or manipulate image, audio or video content. So a future Digital Einstein chatbot (or sales pitch) is likely to need to unequivocally declare itself artificial before it starts faking it, sparing internet users from having to apply a virtual Voight-Kampff test.

For now, though, the erudite-sounding interactive Digital Einstein chatbot still has enough of a lag to give the game away. Its makers are also clearly labelling their creation in the hopes of selling their vision of AI-driven social commerce to other businesses.