Snapchat today is rolling out its first set of parental controls, after announcing last October it was developing tools that would allow parents to gain better visibility into how their teens used the social networking app. The update follows the launches of similar parental control features across other apps favored by teens, including Instagram, TikTok and YouTube.

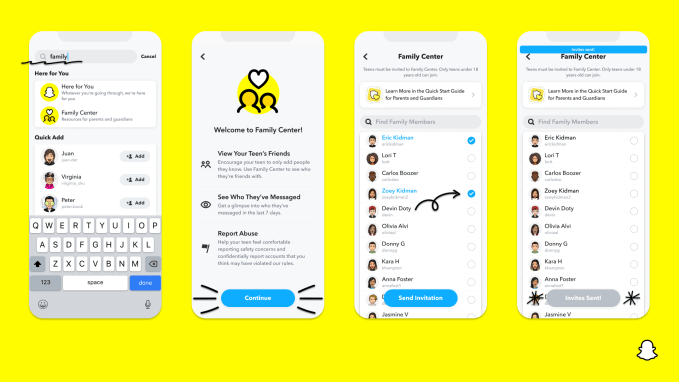

To use the new feature, known as Family Center, parents or guardians will need to install the Snapchat app on their own device in order to link their account to their teen’s through an opt-in invite process.

Once configured, parents will be able to see which accounts the teen has had conversations with on the app over the past seven days, without being able to view the content of those messages. They’ll also be able to view the teen’s friend list and report potential abuse to Snap’s Trust & Safety team for review. These are essentially the same features TechCrunch reported earlier this year were in development.

Parents can access the new controls either from the app’s Profile Settings or by searching for “family center” or related terms from the app’s Search feature.

Snap notes the feature is available only to parents and to teens aged 13 through 18, as the app is not meant to be used by younger users. The launch comes on the heels of increased pressure on social networks to better protect their minor users from harm both in the U.S. and abroad. This has led big tech companies to introduce parental controls and other safety features to comply with E.U. laws and expected U.S. regulations.

Other social networks have introduced more expansive parental controls compared with what’s available at launch from Snapchat’s Family Center. For example, TikTok allows parents to set screen time controls, enable a more “restricted mode” for younger users, turn off search, set accounts to private, restrict messaging as well as who can view the teen’s likes and who can comment on their posts, among other things. Instagram also includes support for time limits set by parents alongside its parental controls.

Snap, however, points out that it doesn’t require as many parental controls because of how its app was designed in the first place.

Image Credits: Snap

By default, teens have to be mutual friends to begin communicating, so there’s a reduced risk of them receiving unwanted messages from potential predators. Friend lists are private and teens aren’t allowed to have public profiles. In addition, teenage users only show up as “Suggested Friends” or in search results when they have friends in common with the user on the app, which also limits their exposure.

That said, parents’ concern over Snapchat isn’t limited to fears of unwanted contact between teens and potentially dangerous adults.

At its core, Snapchat’s disappearing messages feature makes it easier for teens to engage in bullying, abuse and other inappropriate behavior, like sexting. As a result, Snap has been the subject of multiple lawsuits from grieving parents whose teens committed suicide. The lawsuits claim that Snap’s platform helped facilitate online bullying, and they have since led the company to revamp its policies and limit access to its developer tools. It also cut off friend-finding apps that had encouraged users to share their personal information with strangers, a common avenue for child predators to reach younger, vulnerable Snapchat users.

Sexting has also been at issue in multiple lawsuits. Most recently, a teenage girl initiated a class-action lawsuit against Snapchat, alleging that its designers have done nothing to protect against the sexual exploitation of girls using its service.

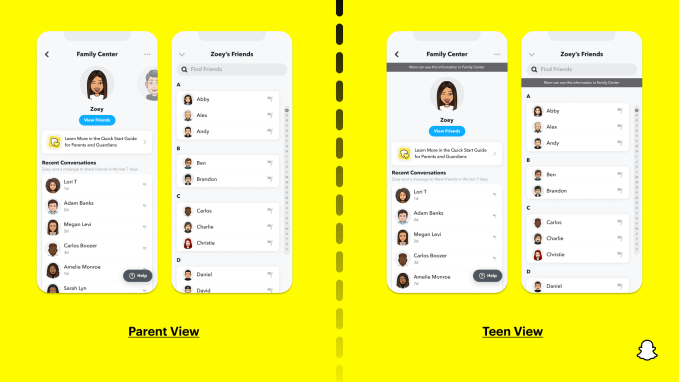

With Snapchat’s new Family Center, the company is giving parents some insight into teens’ use of the app — but not enough to fully prevent abuse or exploitation, as it favors maintaining the teen’s privacy.

For parents, the ability to view a teen’s friend list doesn’t necessarily help them understand if those contacts are safe. And parents don’t always know the names of all their teens’ classmates and acquaintances, only those of their closer friends. Snap also doesn’t allow parents to block their teens from sending photos to friends privately, nor has it implemented a feature similar to Apple’s iMessage technology, which automatically intervenes to warn parents when sexually explicit images are being sent in chats. (Though it does now tap into CSAI Matching technology to remove known abuse material.)

The Family Center also offers no controls over whether and how a teen can engage with the app’s Spotlight feature, a TikTok clone of short videos. Nor can parents control whether their teen’s live location can be shared on the in-app Snap Map. And parents can’t control who their teens can add as friends.

The company’s Discover section is ignored by the parental controls, as well.

In a congressional hearing last year, Snap was asked to defend why some content in its Discover section was clearly aimed at adults, such as invites to sexualized video games, articles about going to bars or about porn, and other items that seemed out of sync with the app’s age rating of 13+. The new Family Center offers no control over this part of the app, which includes a sizable amount of clickbait content.

We’ve found this section consistently features intentionally shocking photos and medical images, similar to the low-value clickbait articles and ads you’ll see scattered across the web.

At the time of writing, a quick scroll through Discover uncovered various articles designed to frighten or alarm — at least three articles featured photos of giant spiders. Another was about a parent who murdered her children. One story focused on Japan’s suicide forest and another was about people who died at theme parks. There was also a story of a teacher caught “cheating with” (its words) a 12-year-old student — a truly disgusting way to title a story about child sexual abuse. And there were multiple photos of rare medical conditions that should probably be left to a doctor’s viewing, not shown to younger teens.

Snap says a future update will introduce “content controls” for parents and the ability for teens to notify their parents when they report an account or a piece of content to Snap’s safety team.

“While we closely moderate and curate both our content and entertainment platforms, and don’t allow unvetted content to reach a large audience on Snapchat, we know each family has different views on what content is appropriate for their teens and want to give them the option to make those personal decisions,” a Snap spokesperson said of the upcoming parental control features.

The company added it would continue to add other controls after gaining more feedback from parents and teens.