The tools of modern cinema have become increasingly accessible to independent and even amateur filmmakers, but realistic CG characters (like them or not) have remained the province of big-budget projects. Wonder Dynamics aims to change that with a platform that lets creators literally drag and drop a CG character into any scene as if it were professionally captured and edited.

Yes, it sounds a bit like overpromising. Your skepticism is warranted, but as a skeptic myself I have to say I was extremely impressed with what the startup showed of Wonder Studio, the company’s web-based editor. This isn’t a toy like an AR filter — it’s a full-scale tool, and one that co-founders Nikola Todorovic and Tye Sheridan have longed for themselves. And most importantly, it’s meant to make artists’ jobs easier, not replace them outright.

“The goal all along was to make a tool for artists, to empower them. Someone who has big dreams doesn’t always have the resources to manifest them,” said Sheridan, whom many will have seen starring in Spielberg’s film adaptation of Ready Player One, so his familiarity with the complexities of CG-assisted production and motion capture is very much firsthand.

Todorovic and Sheridan have known and worked with each other for years and frequently hit this wall: “Both Tye and I were writing films we couldn’t afford to make,” said Todorovic. Their company, which has operated mostly in stealth until now, raised a $2.5 million seed round in early 2021 and an additional $10 million A round later that year.

The thing is, although software for creating 3D models, editing, compositing, and coloring (among other steps in the filmmaking process) is much easier to buy and use these days, the process for actually putting a CG character in a scene is still very complicated.

Wonder Dynamics founders Nikola Todorovic, left, and Tye Sheridan, right.

Say you want to include a robot companion for a scene in your sci-fi film. An artist making a model and textures and so on is only the very first step. Unless you want to hand-animate it (not recommended!), you’ll need a motion capture studio or on-set gear, reflector balls, green screens and everything. From those, motion primitives need to be applied to the CG skeleton, and the character substituted for the actor. But then the 3D model needs to match the direction and color of the lighting, the cast and grain of the film, and more. Hopefully you hired people to capture and characterize those as well.

Unless a filmmaker happens to be an expert in all of these individual pre- and post-production processes and has a hell of a lot of time on their hands, it’s simply out of scope and budget. At the end of the day you could be looking at as much as $20,000 per second for major VFX work, like adding a dragon or superhero, not to mention days’ worth of technical labor. So indie films tend not to have prominent VFX at all, let alone fully animated characters.
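To put that per-second figure in perspective, here is a minimal arithmetic sketch. It treats the $20,000 quoted above as a ceiling, not a typical rate. The function name and the shot lengths are invented for illustration.

```python
# Upper-bound figure quoted in the article, in US dollars per second of footage.
MAX_COST_PER_SECOND_USD = 20_000


def vfx_cost_ceiling(seconds, rate=MAX_COST_PER_SECOND_USD):
    """Return the upper-bound cost of `seconds` of major VFX footage."""
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    return seconds * rate


# Hypothetical shot lengths, chosen only for illustration.
for label, seconds in [("10-second shot", 10), ("90-second sequence", 90)]:
    print(f"{label}: up to ${vfx_cost_ceiling(seconds):,.0f}")
```

At that ceiling, even a minute and a half of creature work would run well into seven figures, which is the gap the founders describe.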

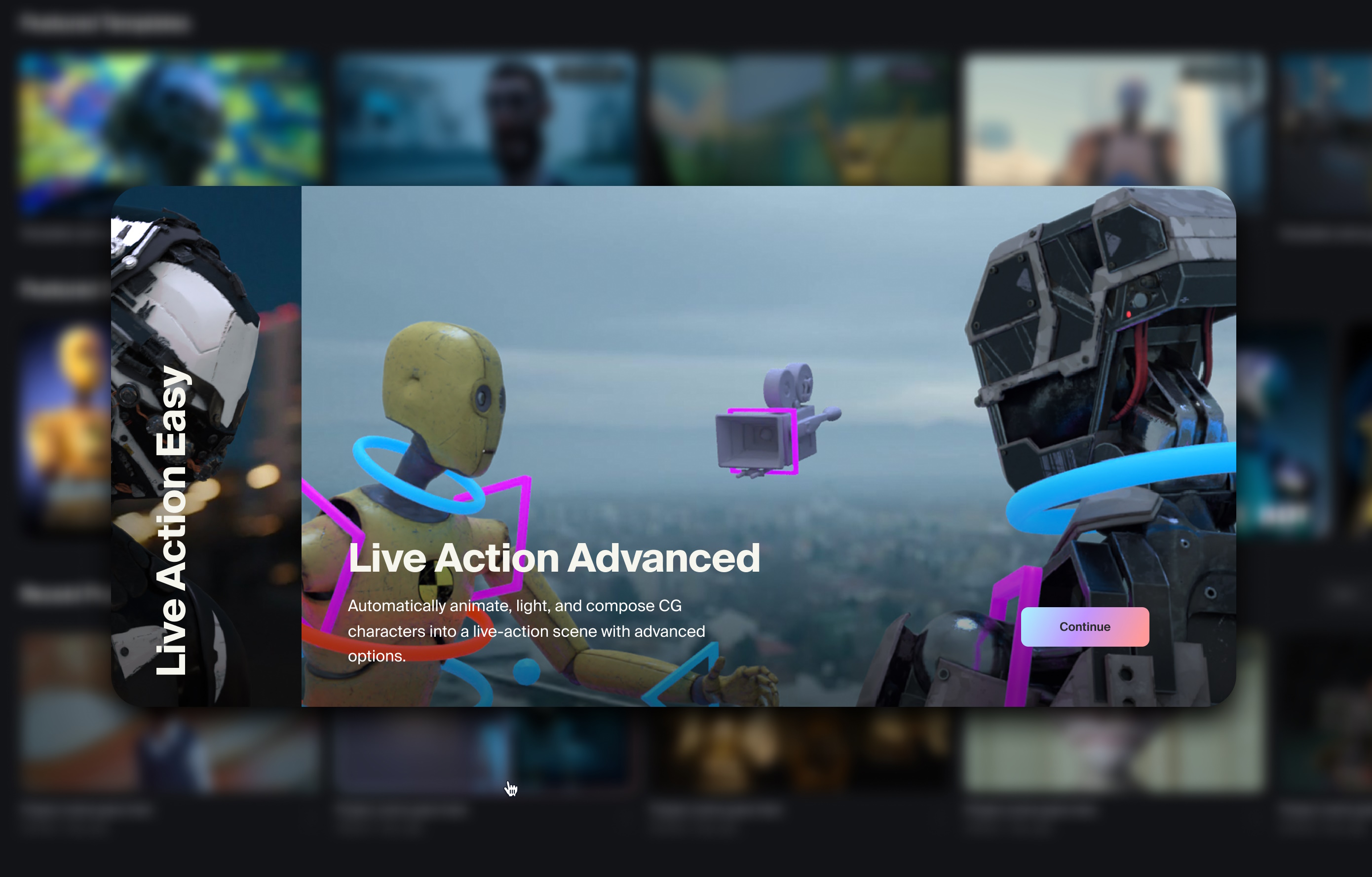

Wonder Studio is a platform that makes this process as simple as selecting a filter or brush in Photoshop. It sounds too good to be true, but Sheridan and Todorovic have been working on it for three years now and the results show it. “We wanted to build something foundational — that’s why it took so long,” said Sheridan.

“We built something that automates this whole process, animates it live, frame by frame, there’s no need for mocap. It automatically detects actors based on a single camera. It does camera motion, lighting, color, replaces the actor fully with CG,” Todorovic explained.

But crucially, it doesn’t just do this live in camera by pasting it on there à la TikTok, or spit out a questionably “final” product. All the actual pieces that VFX artists would normally create or interact with are still generated. And, he was careful to point out, none of it is trained on artists’ existing work.

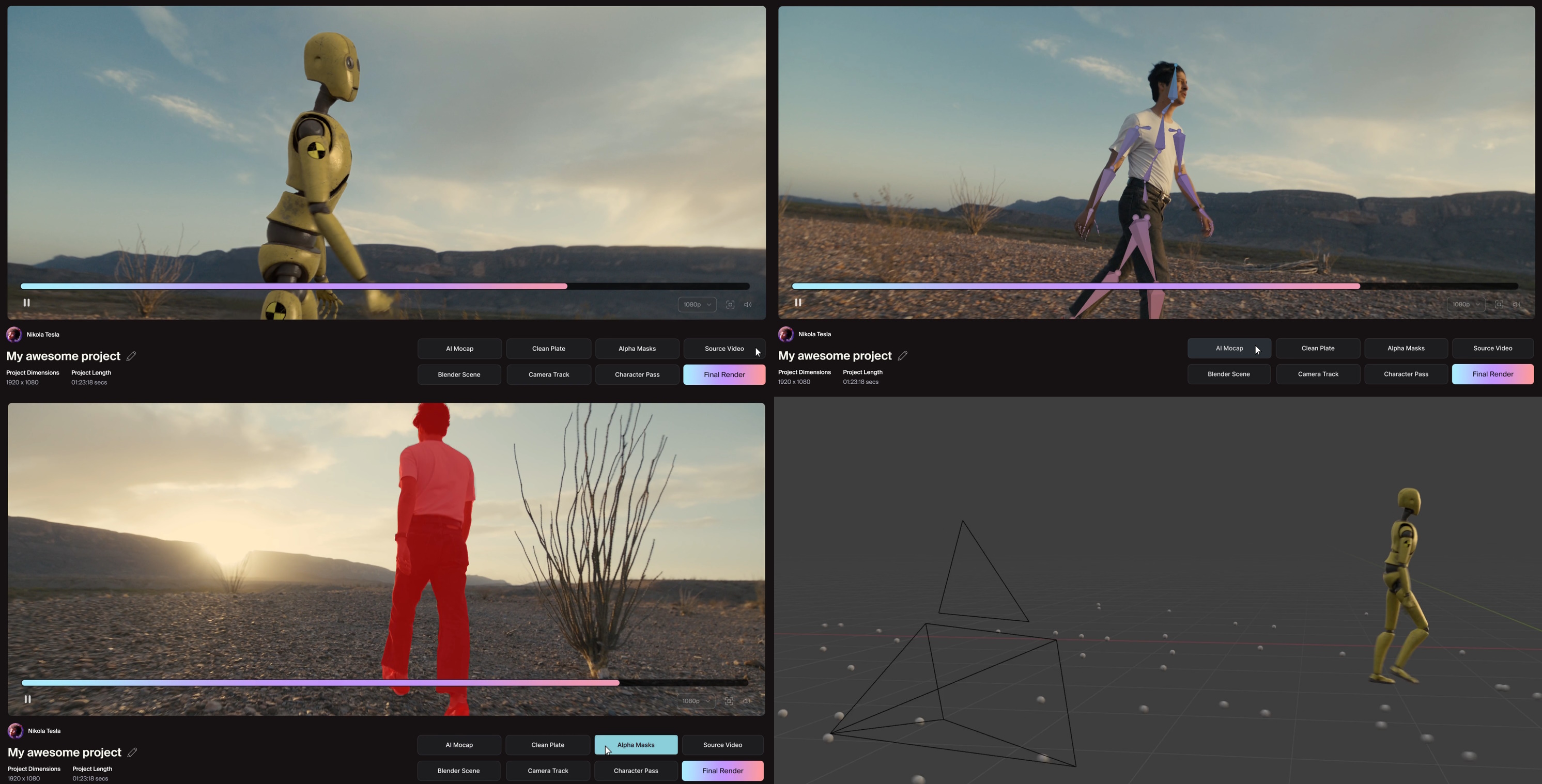

Final shot, mocap data, mask, and 3D environment generated by Wonder Studio.

“You get mocap, clean plate, masks, Blender scene, it analyzes the noise and grain,” he continued. That is: animation and motion data (including hands and face), the shot without the actor or replacement, outlines of characters and objects frame by frame, and a 3D representation of the environment with terrain and other features. And it’s all matched automatically to the qualities of the shot (or shots, since it can track actors across a full scene with multiple angles).
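For readers who think in data structures, the outputs listed above can be pictured as a per-shot bundle. This is only an illustrative sketch: the class, field, and file names are all invented here, and nothing in it reflects Wonder Studio’s actual export layout or API.

```python
from dataclasses import dataclass, fields
from pathlib import Path
from typing import List


@dataclass(frozen=True)
class ShotExport:
    """Hypothetical bundle of the per-shot outputs the article lists."""

    mocap: Path          # animation and motion data, including hands and face
    clean_plate: Path    # the shot without the actor or the replacement
    masks: Path          # frame-by-frame outlines of characters and objects
    blender_scene: Path  # 3D representation of the environment
    grain_profile: Path  # analyzed noise and grain of the footage

    def missing(self) -> List[str]:
        """Names of outputs whose paths do not exist on disk."""
        return [f.name for f in fields(self) if not getattr(self, f.name).exists()]


# Invented paths, for illustration only.
export = ShotExport(
    mocap=Path("shot_010/mocap"),
    clean_plate=Path("shot_010/clean_plate"),
    masks=Path("shot_010/masks"),
    blender_scene=Path("shot_010/blender_scene"),
    grain_profile=Path("shot_010/grain_profile"),
)
print(export.missing())
```

The point of the sketch is that each piece stays a separate, editable asset that a VFX artist can open on its own, instead of being baked into a single flattened video.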

Lots more examples and details can be seen at the company’s site.

Much of this kind of thing is rote work, much of it drudgery, done the same way over and over as part of the overall VFX process. These “objective non-creative” tasks — so called because they are technical necessities rather than expressive outcomes — would definitely fall under the “dull” in “dirty, dull, and dangerous”: the three D’s of automation.

“You can’t replace artists with AI, we’re about enhancing and empowering them. This doesn’t disrupt what they’re doing; it automates 80-90% of the objective VFX work and leaves them with the subjective work,” Todorovic explained. “The beauty of AI is taking something so complicated and simplifying it.”

Lest you worry about whether this will put lots of people out of a job, it doesn’t seem likely. The VFX industry is positively overwhelmed with work, especially as companies like Marvel and Netflix insist on regular releases of incredibly demanding CG work that must be done by dozens of independent VFX houses. One does the capes, another the explosions, another the digital makeup, yet another deformable surfaces. And they’re all booked out for years. Sure, they’re paying the bills, but if they could take on twice as many jobs because they spend half as much time manually outlining a target actor on every frame, they would probably do it in a second.

It all depends on the quality of the results, of course, and that’s where automation of these processes tends to fall down. Sure, you can get a tool that automatically detects motion in the selected actor and outputs a rough animation for a model. But you have to tell it which actor to track in every shot, and once you have all that, it doesn’t magically become a CG character; it must go to the next team, which knows what to do with a bunch of coordinates and Bezier curves.

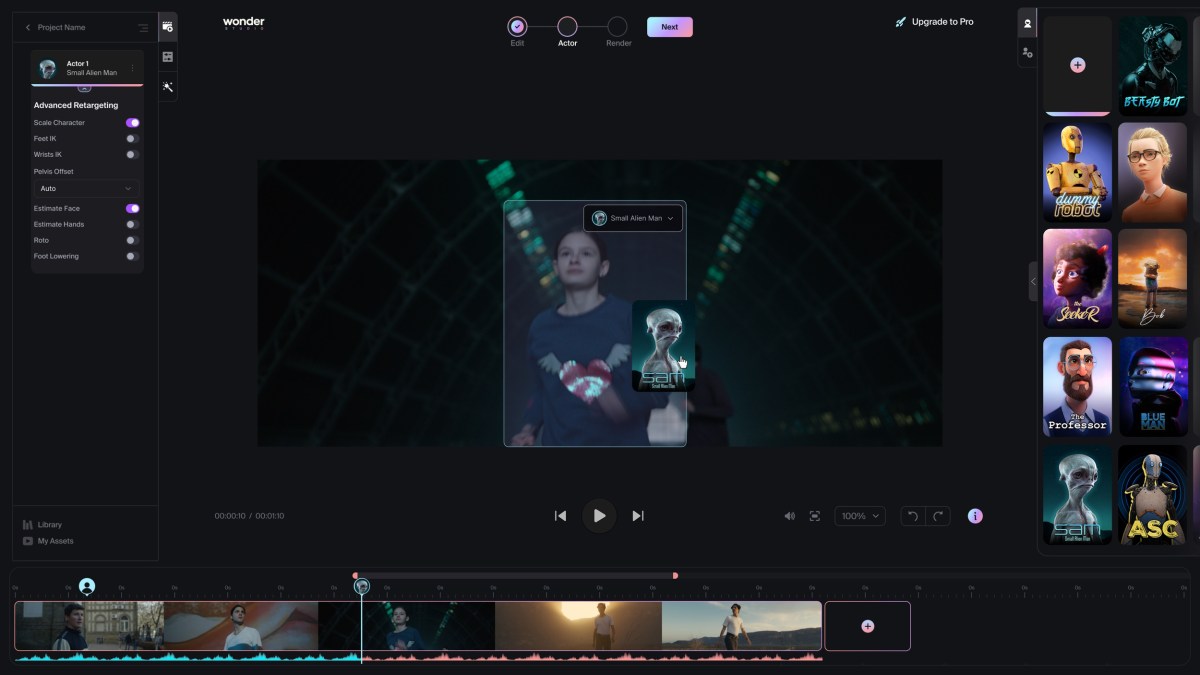

Sheridan and Todorovic wanted Wonder Studio to be a system that’s simple enough for a kid to use, but powerful enough for a VFX professional — hence the raw data output if you want it, but drag-and-drop functionality out of the box.

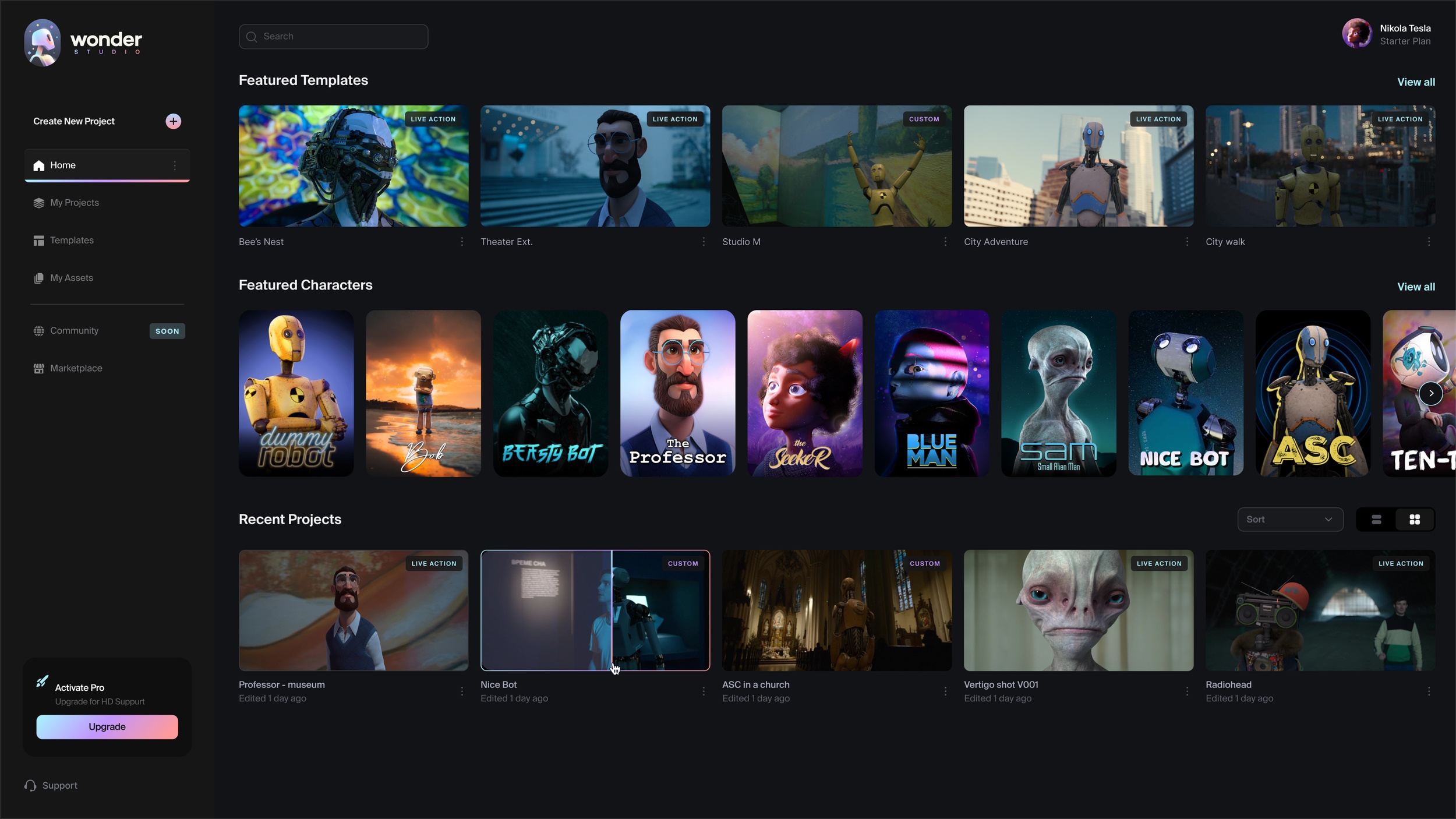

Wonder Studio comes with a bunch of sample models out of the box, but no one is expected to just use these in their movie. It’s more like a set of CG character archetypes you can see in action: a Pixar-type dad and daughter, a couple of robots of varying seriousness, a suspiciously Gollum-like “Sam” (small alien man), and more.

“We want artists to create their own. You get your FBX [a common 3D media format] and your textures and it’s assembled on the platform, just like you would import in Blender or Unreal or whatever else,” said Todorovic. Models can also be made available for purchase on the platform.

Even these basic elements could be enough for a pitch, rough cut, or previsualization, though, the last of which is often done by stunt staff with almost no VFX whatsoever. Wouldn’t it be great to see what the Wolverine costume or slimy sewer monster would actually look like in a fight choreography you’re proposing, rather than just in the storyboards? How about a fantasy village with actual bird people instead of bad makeup? Or a short sci-fi film that only needs a robotic cashier or mutant street sweeper to give it a casual futuristic flair? Right now that’s not even an option. Entry-level CG characters open up entire genres that were locked behind big budgets.

More features are planned, from CG environments characters can be composited into, to capturing camera motions of any media so they can be simulated, studied, tweaked and reused.

“This is a big first step, but the big picture is we want to have a platform where any kid can sit and direct films by sitting at his computer and typing,” said Sheridan. A free tier will be offered for anyone to try, and more advanced features for professionals and heavy users will be available on various paid plans. The founders plan to work directly with VFX houses to create and improve integrations and workflows.

Their hope is that this begins to bridge the divide between low- and no-budget filmmaking and the kind of no-holds-barred imaginative flights that only someone with James Cameron’s resources can conceive, let alone execute on.

The platform is in beta form now but is already being used by acclaimed action directors the Russo Brothers for an upcoming Netflix movie with Millie Bobby Brown and Chris Pratt. No word on who’s being replaced with what, but it’s a powerful endorsement nonetheless.

“I may be biased, but making movies has to be one of the coolest jobs you could ever have,” said Sheridan in a release announcing Wonder Studio’s debut. “We are storytellers at heart, and we’re only building technology as a means to help us tell better stories. AI presents a huge opportunity for more films to be made and for more voices to be heard.”